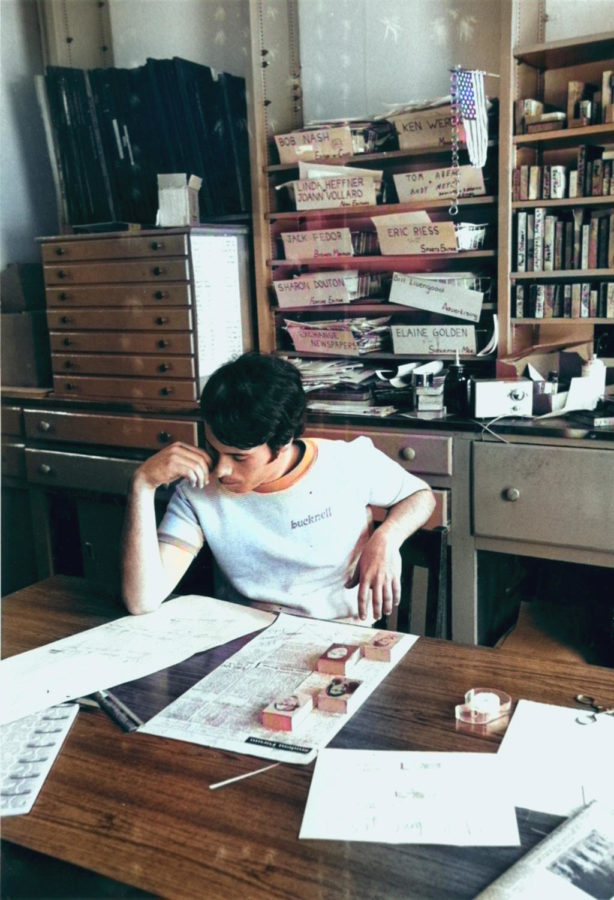

Aaron Mitchel

Christina Oddo

Aaron Mitchel, assistant professor of psychology and Swanson Fellow in the Sciences and Engineering, is researching how we rely on more than sound to figure out what someone else is saying. On his Faculty Story page on the University website, Mitchel explains that the way we react when we cannot understand what someone is saying, whether because of background noise or an unfamiliar language, begins to show that speech perception involves more than auditory signals.

“We don’t live in just an auditory environment,” Mitchel said on his page. “We typically have multiple senses interacting, with very important and very rich cues coming from visual input.”

Mitchel believes we can use facial information to help figure out where a word begins and ends, a difficult task because speech is a "constant flow." Visual cues can also help listeners tell words apart when the sound alone is ambiguous.

Mitchel's research also examines how our perception of speech depends on who we think is speaking. He has studied this by having subjects identify ambiguous sounds produced by different speakers.

Such research could benefit people with hearing impairments, for example: a better understanding of visual cues may help them overcome the challenges they face.

“First we have to do the basic research to know what the important visual cues are,” Mitchel said on the website.

Once those cues are identified, parents could, for example, be trained to accentuate them to help their children.

Mitchel is currently working with students Laina Lusk ’13, Adrienne Wendling ’13, Chris Paine ’14 and Alex Maclay ’15 in the lab.

Mitchel's current work uses an eye tracker to identify which facial cues listeners rely on to learn word boundaries.

“For example, we know we use facial cues more when we’re in a noisy environment (you can think of trying to understand someone in a crowded restaurant–to understand someone you need to look at their face, and specifically look at their mouth), and so in the future I hope to introduce noise to the audio stream to see how that changes the recruitment of facial cues,” Mitchel said. “This may, much further down the road, have implications for individuals with cochlear implants, who have great difficulty segmenting speech on the basis of auditory cues.”

Mitchel's interest in this topic grew out of his earlier research on language acquisition. He noticed that the field focused exclusively on the auditory input available to learners and recognized that there is more to language than sound.

Mitchel believes that fully understanding the environment in which we learn requires considering all of the input available to language learners. This idea struck him most forcefully when he attended a talk at a major conference on language development.

“The presenters were showing a video of a parent-infant interaction, and they only described the nature of the auditory input (it was a study on infant-directed speech), even though right there in the video the infant was fixated on the mother’s face, intently watching every lip movement and head bob,” Mitchel said. “Clearly, infant directed speech wasn’t just about shaping the auditory input, there was something very important going on in the face! Indeed, a study (by different authors–I wish I had thought of this) in 2010 found that speech-relevant facial cues are exaggerated in infant directed speech, suggesting an important learning benefit for visual speech.”

This is just one line of Mitchel's research, and he plans to expand on these ideas in future studies.