What’s Your Score: predictive policing raises issues

December 1, 2021

Discriminatory predictive policing algorithms are frequently used to identify potential criminals, flag areas of high drug activity and assign risk scores. Their use normalizes racism in society and in the criminal justice system, and it needs to be stopped.

The police are knocking on your door. Are they looking for you? A family member? You didn’t commit any crimes, so why are they here? Well, it turns out that a predictive policing algorithm put you on their new “heat list.” This list was meant to warn officers about people predicted to be involved in violent crimes, and now they are investigating you. According to their algorithm, your race, gender, location and socioeconomic status led to the belief that you had the “profile of a criminal.” Again, you didn’t do anything wrong, so how can this be fair? The answer is that it is not, and problems with the information used to make these determinations are already apparent. An algorithm like this sets up systemic racial biases to be not only continued but normalized through its use.

This is what happened to many people in Chicago in 2014, where heat lists had been in use for years. People were targeted, profiled and treated as potential participants in a crime, leading the police to show up at their front doors. This raises serious concerns, some over how the algorithm targets individuals and some over where and how else it is being applied. If the police wanted to search your house, how much suspicion would they need, and would the search be lawful? All of these questions lead back to how the algorithm reached its conclusion. A lot of trust is being placed in the algorithm’s veracity, and the implications of that trust are threatening.

More concerns emerge when predictive policing is used to identify areas with higher criminal activity. PredPol is a learning algorithm that uses previously recorded police data on drug arrests to flag the ‘hotspots’ in Oakland, Calif., predicted to have the highest drug activity. This would prompt officers to patrol those areas more in the following days. When it was tested on a synthetic population, it was found to target black people at “roughly twice the rate of whites,” said Kristian Lum, a lead statistician at the Human Rights Data Analysis Group, despite the fact that drug use was estimated to be about equal across racial groups. This also creates a self-reinforcing feedback loop: officers record more activity in the areas they patrol more, so the algorithm keeps sending them back to the same areas and keeps targeting the people who live there. While all of this may be well intentioned, aiming to get criminals off the streets, it ultimately helps hide the discriminatory problems it creates.

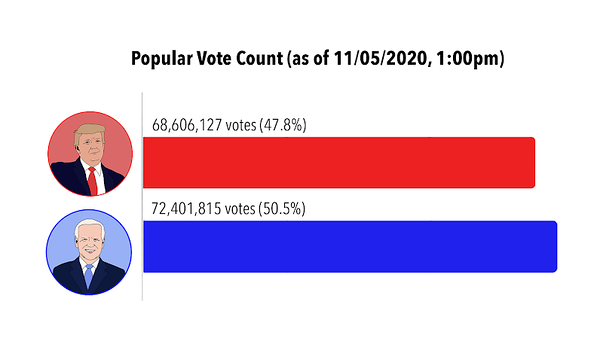
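
The feedback loop described above can be sketched in a few lines of Python. This is a toy model, not PredPol’s actual method: the two areas, the starting arrest counts, the patrol numbers and the names `simulate_patrol_feedback` and `share` are all made up for illustration. It assumes both areas have the same true rate of drug activity, and that police only record activity in the area they are sent to.

```python
def simulate_patrol_feedback(recorded, true_rate, days, patrols_per_day=10):
    """Toy model: each day, every patrol goes to the area with the most recorded arrests.

    recorded:  dict mapping area name -> arrests already on record (hypothetical).
    true_rate: dict mapping area name -> arrests found per patrol (hypothetical).
    """
    recorded = dict(recorded)  # copy so the caller's data is left untouched
    for _ in range(days):
        # The "hotspot" is simply the area with the most arrests on record.
        hotspot = max(recorded, key=recorded.get)
        # Arrests are only recorded where officers are sent, so only the
        # hotspot's count can grow.
        recorded[hotspot] += patrols_per_day * true_rate[hotspot]
    return recorded


def share(recorded, area):
    """Fraction of all recorded arrests that come from one area."""
    return recorded[area] / sum(recorded.values())


# Invented starting point: area A happens to have more arrests on record,
# but both areas have the same underlying rate of drug activity.
history = {"A": 60, "B": 40}
same_rate = {"A": 0.5, "B": 0.5}

after = simulate_patrol_feedback(history, same_rate, days=30)
print(f"Area A share before: {share(history, 'A'):.0%}")
print(f"Area A share after:  {share(after, 'A'):.0%}")
```

Under these assumptions, area A’s share of recorded arrests climbs from 60 percent to 84 percent after 30 days, even though the two areas behave identically. The data the algorithm learns from reflects where police looked, not where drug activity actually happened.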

Say someone now gets arrested because more police than usual were patrolling the area. When it is time to determine bail, another algorithm comes into play. The abundance of algorithmic generalizations in the criminal justice system is alarming, but automation is where our world is heading, right? This algorithm aims to determine flight risk based on a series of questions and criminal history data. It was only 61 percent accurate, which is just barely better than a coin flip, ProPublica journalist Julia Angwin said. “The formula was particularly likely to falsely flag black defendants as future criminals, wrongly labeling them this way at almost twice the rate as white defendants.” And while white defendants were more frequently mislabeled as low risk, black defendants were 77 percent more likely to be given a higher risk score. A high risk score could mean no bail for the defendant. All of this is unfair and normalizes systemic problems such as the disproportionate number of black people incarcerated.
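
The gap between overall accuracy and fairness is easier to see with numbers. The sketch below uses invented counts for two hypothetical groups, not ProPublica’s data, and the class name `Outcomes` is an assumption made for illustration. It shows how a score can be equally accurate for both groups while falsely flagging one group about twice as often.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Counts of risk-score results for one group (all numbers invented)."""
    true_pos: int   # flagged high risk, and the predicted outcome happened
    false_pos: int  # flagged high risk, but the predicted outcome did not happen
    true_neg: int   # flagged low risk, and the predicted outcome did not happen
    false_neg: int  # flagged low risk, but the predicted outcome happened

    def accuracy(self) -> float:
        total = self.true_pos + self.false_pos + self.true_neg + self.false_neg
        return (self.true_pos + self.true_neg) / total

    def false_positive_rate(self) -> float:
        # Of the people for whom the outcome did not happen,
        # what fraction were still flagged high risk?
        return self.false_pos / (self.false_pos + self.true_neg)

    def false_negative_rate(self) -> float:
        # Of the people for whom the outcome did happen,
        # what fraction were labeled low risk?
        return self.false_neg / (self.false_neg + self.true_pos)


# Hypothetical groups with identical overall accuracy.
group_a = Outcomes(true_pos=300, false_pos=200, true_neg=300, false_neg=200)
group_b = Outcomes(true_pos=150, false_pos=100, true_neg=450, false_neg=300)

for name, group in [("Group A", group_a), ("Group B", group_b)]:
    print(
        f"{name}: accuracy {group.accuracy():.0%}, "
        f"falsely flagged high risk {group.false_positive_rate():.0%}, "
        f"falsely labeled low risk {group.false_negative_rate():.0%}"
    )
```

With these made-up counts, the score is 60 percent accurate for both groups. Yet group A is wrongly flagged as high risk 40 percent of the time, compared with about 18 percent for group B, while group B is more often wrongly labeled low risk. A single accuracy figure hides who bears the cost of the errors.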

The inaccuracies and biases found in predictive policing algorithms should disqualify them from practical use. Implementing these algorithms in the criminal justice system would make systemic racism worse. It would cement current biases against people of color into our future and continue to affect them disproportionately. Algorithms carry the danger that people will say “an algorithm calculated it that way,” so it must be correct, which normalizes these biases and perpetuates discrimination. While these algorithms should not be used to predict the future, they could be used to look back at the past. It may be possible to use them to identify and address problems that we do not fully understand, so that we can make changes to better ourselves and the future. This way, no one will come knocking on your door because of biased allegations made by a biased algorithm.