In 2022, advances in artificial intelligence (AI) became widely available to the public through ChatGPT. Many across the US, and the world, experimented with the technology and asked it all sorts of silly and absurd questions. But genuine curiosity soon gave way to misuse: AI arrived just as virtual homework and classwork assignments became widespread, and students used it to write papers, solve math problems, and answer other complex questions. As educators quietly struggled to tell AI work from human work, the technology quickly gained a reputation as an enemy of the American education system. However, I believe that this perception of AI as a dishonest tool is self-perpetuated by the education system, and that it doesn’t have to be that way.

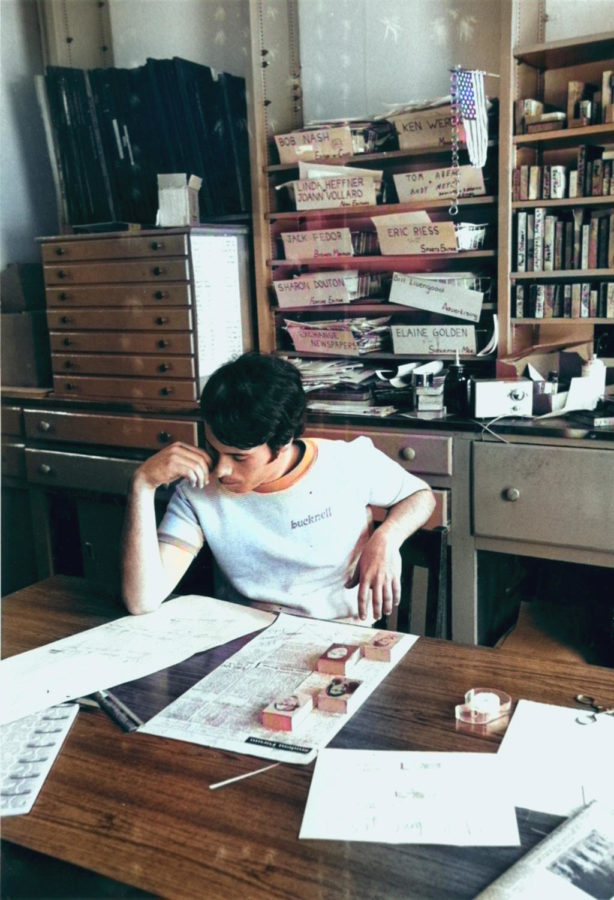

During my time here at Bucknell, I have not encountered a class syllabus that doesn’t include a section on the use of AI. Like attendance and the course schedule, AI seems to have found a regular spot in introductory material. The syllabus usually warns that AI use will be detected with some sort of scanner and treated as plagiarism. This reaction of intense investigations and strict punishments is an indicator of the ballooning AI paranoia among the Bucknell administration, and I understand it. I can see how such a powerful technology can look like a threat to the very pillars of education. However, we cannot allow AI to become an abstract idea of something that is all-knowing and infinitely destructive to education.

Yes, ChatGPT and other systems can churn out 2,000-word essays with the ease of a knife cutting through butter, but the content they create deserves a closer look. Most of the time, AI produces rather lackluster papers that include at least one of the following flaws: poor grammar, disorganized paragraph structure, general blanket statements, repeated words, plagiarized or fake sources, and blatantly incorrect information. Revising an AI-generated essay until it is even close to submittable may take more effort than simply doing your own work. Beyond the horrid quality, there is also a silent fear among honest Bucknellians of being flagged for using AI. The administration’s paranoia often trickles down to professors; in trying to support the administration, some feel pressured to penalize earnest Bucknellians ‘flagged’ by their own faulty, AI-powered AI scanners. At the end of the day, it is extremely difficult for the scanners to tell the difference between original human work and original AI work, especially in creative writing classes.

So now what? The Bucknell administration is swinging in the dark to combat the alleged boogeyman of AI, while hardworking Bucknellians become victims of scanners that still struggle to tell what is and isn’t AI, all while the ‘feared’ technology turns out dull work. I think that instead of taking isolationist measures to shield Bucknell from AI, the administration should recognize how AI can aid the development of work, not produce it. For example, AI can be quite creative, and workshops could use out-of-the-box prompts from AI to promote creative writing. Another idea is an AI-powered scheduling system that promotes time management. AI could even be used to make personalized worksheets for students to enhance academic engagement. The point is, AI has endless potential. While it can be used for deception, it can also be used for creativity and exploration. And while the Bucknell administration can employ tactics of dissuasion and punishment, I can assure you that students will continue to use the technology. AI is here to stay. Bucknell’s administration should take the initiative to coexist with the technology, rather than prolong a doomed effort to eliminate AI use among its students.